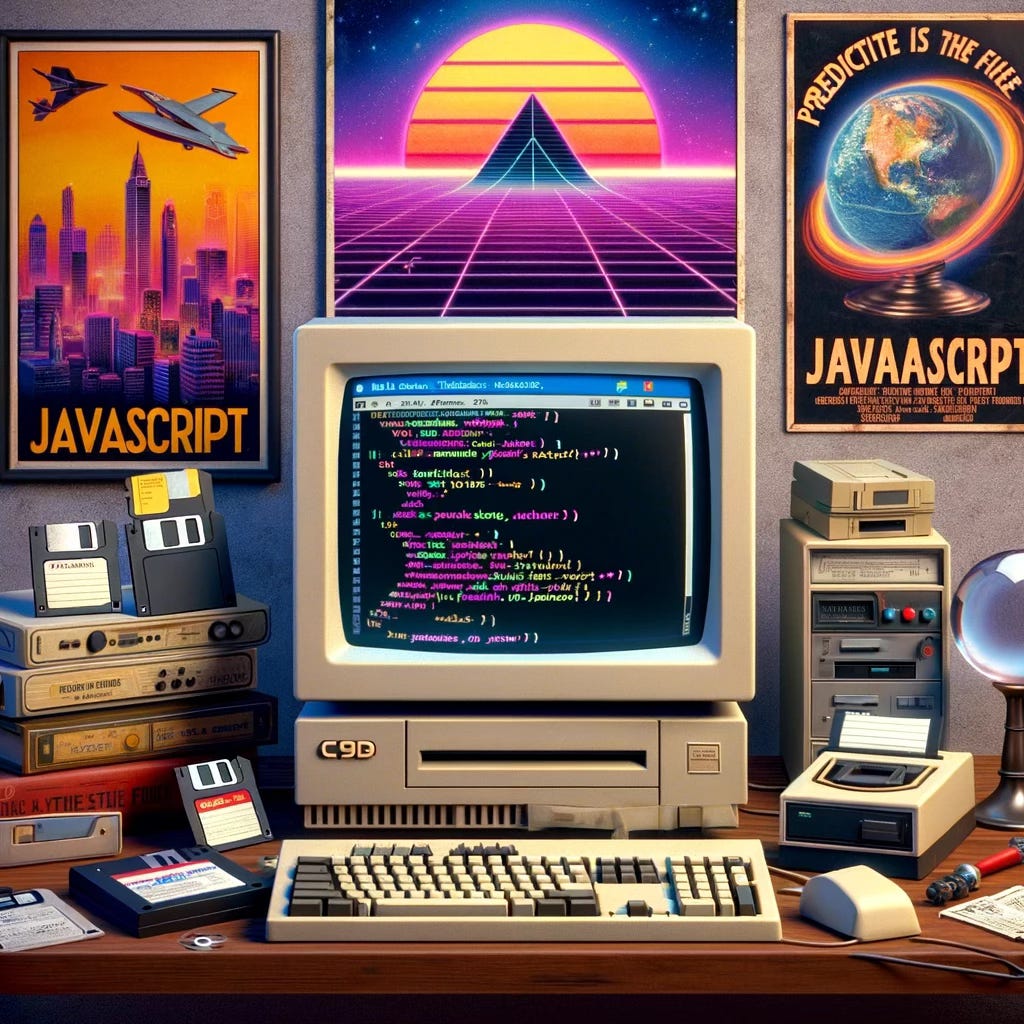

Does this 90s-era theory predict the popularity of Javascript?

This is part of a series of posts I’m writing as I work my way through older books about programming.

In 1996, Richard Gabriel proposed a theory to explain the popularity of programming languages in his book Patterns of Software. Some parts of the theory will seem dated, and some will seem obvious, but when you put them together more than 20 years later, I think they do a decent job of predicting the popularity of Javascript.

The theory has a few parts:

Languages are accepted and evolve by a social process, not a technical or technological one

Successful languages must have modest or minimal computer resource requirements

Successful languages must have a simple performance model

Successful languages must not require users to have "mathematical sophistication"

Let's compare Javascript to Ruby and test this theory's explanatory power.

As we progress, I encourage you to think about your favorite language in these four dimensions. Some that come to mind for me include Objective-C and Swift, Java, Erlang and Elixir, Rust, Kotlin, Clojure, and Go.

Ruby

I love Ruby. I think in Ruby. I've built my career (so far, wisely or not) upon it. There is even a renaissance happening at the moment, where some are turning away from the monstrous complexity of other ecosystems back to the Ruby and Rails community for its pure productivity.

But I think we must agree that it is still far less popular than Javascript.

I am not here to start (or resume) a religious war. In fact, I quite like the Javascript language. Years ago I read a tutorial for Self - a language inspired by Smalltalk, with a far simpler programming model and a cool concept called slots. Javascript was heavily inspired by Self, replacing classes and methods with a simpler model of objects and attributes. Understanding the historical lineage of these languages is a lot of fun and, I’ve found, does a lot to reduce the perceived conflict between the various communities.
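To make the "objects and attributes" idea a little more concrete, here is a minimal sketch of prototype-style delegation in Javascript. The `animal` and `dog` objects are made up for illustration, and this is only my rough reading of how the Self influence shows up, not a faithful port of Self's slots.

```javascript
// A plain object with two "slots": one holds data, one holds a function.
// (The names here are hypothetical, chosen only for this example.)
const animal = {
  name: "generic animal",
  describe() {
    return `I am ${this.name}`;
  },
};

// No class involved: `dog` is a new object that delegates to `animal`.
const dog = Object.create(animal);
dog.name = "a dog"; // shadows the `name` slot found on the prototype

console.log(animal.describe()); // "I am generic animal"
console.log(dog.describe()); // "I am a dog"
console.log(Object.getPrototypeOf(dog) === animal); // true
```

Notice that `describe` is never copied onto `dog`. The lookup simply falls through to `animal` when `dog` has no slot of its own with that name.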

But I digress.

Languages evolve by a social process

There are a few sub-parts to this criterion, which is really asking: how do languages get accepted?

It must be available on a wide variety of hardware

It helps to have local wizards or gurus

It must be a minimally acceptable language

It must be similar to existing popular languages

Javascript and Ruby are now ubiquitous, but there is no platform as ubiquitous as a web browser. Case closed.

Having "local wizards or gurus" is a dated reference, although many meetups do exist for both languages and for many others. Are there more Javascript meetups than Ruby meetups? Has the web made this criterion obsolete? You be the judge.

Javascript was accepted because it was the only choice if you wanted to build functionality into your browser application. It was instantly accessible - nothing to install. Ruby was more traditional - download the runtime to the machine and run your code on it. Being an interpreted language running on a virtual machine, it has no separate compile step like some other languages, but it still has a higher barrier to entry than Javascript.

Ruby's popularity grew because people loved it and shared it. People loved it because it let them write programs more productively, without having to bend their minds around the implementation of the machine. If you hang out in the Ruby community, you'll often hear people talk about how much Ruby makes them love programming. There were real reasons to try out Ruby and real reasons to stick around. It made programming easier and more fun for many.

Javascript's syntax is extremely similar to that of other popular languages. It's a curly-brace language in the C family, like C, Java, and countless others. Curly braces, function definitions, expressions and return statements, imports, and so on are instantly recognizable if you arrive from any number of other C-syntax languages.
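As a small illustration of that family resemblance, here is a sketch written in deliberately old-fashioned Javascript. The function name `sumOfSquares` is hypothetical. I suspect anyone who has written C or Java can read it without ever opening a Javascript tutorial.

```javascript
// Hypothetical helper, named only for this example.
// Braces, an explicit loop, an if statement, and an explicit return:
// all of it should look familiar to a C or Java programmer.
function sumOfSquares(numbers) {
  let total = 0;
  for (let i = 0; i < numbers.length; i++) {
    if (numbers[i] > 0) {
      total += numbers[i] * numbers[i];
    }
  }
  return total;
}

console.log(sumOfSquares([1, -2, 3])); // 10 (1*1 + 3*3; the negative is skipped)
```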

Ruby, on the other hand, is Smalltalk inspired, and thus departs a bit from that lineage. Curly braces are rare (mostly for inline blocks), semicolons are practically nonexistent, return values are implicit rather than explicit, and function definitions have more in common with BASIC or Python than with C or Java.

Ruby is famous for what it borrows from Smalltalk, most notably its object model (everything descends from Object) and its funky block syntax for deferred lambdas. It looks funky if you have never seen Smalltalk blocks before - which is most people. (Nowadays other languages, such as Swift, have also adopted blocks.)

(Note that I am not saying these things are negative aspects of Ruby. I love these parts of Ruby, and they are powerful. I am merely comparing the similarity of Javascript and Ruby to the other languages that came before them.)

Both Javascript and Ruby benefit from incremental improvement rather than giant releases. Ruby does occasionally introduce bigger changes - pattern matching is a recent example of this. An older example might be the shorthand Hash syntax. These are released as features in major versions, rather than as incremental improvements. A comparable example from Javascript would be the class syntax released as part of ES2015.
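Here is a rough sketch of what the ES2015 class syntax changed for everyday code. `PointOld` and `PointNew` are hypothetical names for the same tiny type, written first in the older constructor-function style and then with the newer class syntax.

```javascript
// Before ES2015: a constructor function plus a method attached to its prototype.
function PointOld(x, y) {
  this.x = x;
  this.y = y;
}
PointOld.prototype.norm = function () {
  return Math.sqrt(this.x * this.x + this.y * this.y);
};

// ES2015 and later: the same idea expressed with the class syntax.
class PointNew {
  constructor(x, y) {
    this.x = x;
    this.y = y;
  }
  norm() {
    return Math.sqrt(this.x * this.x + this.y * this.y);
  }
}

console.log(new PointOld(3, 4).norm()); // 5
console.log(new PointNew(3, 4).norm()); // 5
console.log(typeof PointNew); // "function"
```

The last line is the interesting part: a class is still a function underneath, so the newer syntax was layered on top of the existing prototype model rather than replacing it.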

In short, Javascript started as a simple language with many flaws, and over the course of its lifetime it has been incrementally improved a thousand-fold over its old self.

While Ruby has also benefited from incremental improvements, it was far more complete at its inception than Javascript. Which, according to the theory put forth by Richard Gabriel, is actually an obstacle to adoption, not a benefit!

For these reasons, while it's a close call, I think it's safe to put Javascript as the winner in the accessibility category.

Resource requirements

For a language to be successful, it must not be a resource hog.

I know what you're thinking. Isn't Ruby much slower than Javascript?

Well, it was when it started. And for our purposes today, that's really all that matters. Yes, a Javascript program used less memory and ran faster than an equivalent program in Ruby.

In the intervening years, the performance issues of Ruby for most web applications have been resolved. Ruby is plenty fast now.

But that doesn't change history, so we must acknowledge that when we compare the resource usage of the languages at their inception, Javascript wins.

The other thing Richard Gabriel points out here is:

the sophistication of a programming language is limited by the sophistication of the hardware, which may be high in terms of cleverness needed to achieve speed but is low in other ways to maintain speed.

I think this is an interesting tidbit, especially if you consider the browser on top of the OS to be the "machine" that Javascript runs on.

Mathematical sophistication

Programmers are not mathematicians... and I don't mean sophisticated mathematicians, just people who can think precisely and rigorously in the way that mathematicians can.

This one is far more subjective, so I'll just say here that I think both Javascript and Ruby are roughly equivalent here. Both languages allow many, many developers to grasp the concepts required to be productive and write good software.

But it is interesting to think about in comparison with other languages - for example, monads in Haskell and macros in Lisp tend to be more difficult to grasp and seem to require a capability that exceeds the average. (Although I'm not sure this is actually true. I think these concepts just require more study - but that's a different topic.)

Performance Model

I am not educated enough on the intricacies of Javascript to make an objective comparison here. I know that Ruby, being Smalltalk inspired, uses a sophisticated virtual machine to allow the programmer to, for many tasks, forget about space and time complexity. I believe the same is true of Javascript. Neither has as simple a performance model as something like C, although I would bet money that Javascript's object model, being much simpler, allows its performance characteristics to be simpler too.

Does this explain the popularity of Javascript?

After discussing C vs Lisp on these various criteria, Richard Gabriel goes on to say:

The next interesting candidates are the object-oriented languages, Smalltalk and C++. Smalltalk, though designed for children to use, requires mathematical sophistication to understand its inheritance and message-passing model. Its performance model is much more complex than C's, not matching the computer all that well from a naive point of view. Smalltalk does not run on every computer, and in many ways it is a proprietary language, which means that it cannot follow the acceptance model.

Substitute Ruby for Smalltalk and see if this rings true. Now substitute Javascript. Does it ring as true?

What do you think?